I recently picked up the Old-School Essentials adventure Xanadu by Vasili Kaliman, which garnered the coveted rating of "The Best" from Bryce at tenfootpole, as well as a favorable review from Ben over at Questing Beast. I haven't read it 100% through, but having skimmed it I tend to agree with them that it's a pretty great addition to the stable of R-rated "weird fantasy" adventures for Old School D&D. It features some freaky body horror stuff, a fun nightmarish take on the Tooth Fairy, and tons of treasure for intrepid adventurers to recover (if they survive). I probably would be cautious about throwing it into a long-term OSE campaign due to its lethality and a few "gotcha" moments, but it's a slam dunk for a one-shot with a group that will enjoy that sort of thing. Very fun pixel art-styled interior art, too.

Enough of that, though. This post isn't a review; the two reviewers I linked above are some of the best in the business, so go ahead and check their reviews out if you want to read more about the adventure overall. Nope, this post is... a math problem. Because I'm a mad lad who's been away from work for too long and needs to solve some equations to truly feel alive.

The puzzle that wasn't

Near the end of his review, Ben mentions one of his minor gripes with the adventure is that one of the marquee puzzles of the dungeon (required in order to escape the dungeon, so all groups will have to interact with it at some point) isn't so much a puzzle as a "randomized flowchart" where the players have essentially zero control over whether or not they succeed in solving the puzzle.

This puzzle consists of the aforementioned flowchart (pictured below), along with instructions that when a player character attempts to solve the puzzle, they should repeatedly roll a d10, moving from node to node until they reach the "Start", "Uh Oh!", or "Solved" nodes. There are some additional caveats - each full attempt risks wandering monsters, bad things will happen if you hit "Uh Oh!", etc. - but that's the long and short of it. Ben concludes his critique by pointing out that there "really is just a statistical chance that you will end up here or here... so why not just... make them roll a die to see if they end up here or here?"

[Figure: Xanadu's clock puzzle]

Ben is, of course, completely correct here. The clock puzzle isn't really a puzzle, and the flowchart is functionally completely unnecessary - it's just a bunch of repeated die rolls to eventually land on "good thing", "bad thing", or "try again". With any given attempt, there really is just a statistical chance that you will end up at any given end state of the flowchart. The whole thing could be replaced by a table with 3 entries and a probability for each of the 3 possible results. Well... why not?

Today I'd like to answer the question Ben didn't ask: what actually is the statistical chance that on any given try, you will end up at any given end state of the Xanadu clock puzzle?

Quick note: the rest of the blog post is a bunch of math, albeit (hopefully) presented in a format most folks can more or less follow along with. It's worth noting though that the analytical method I present here isn't just useful for this Xanadu problem - it applies to most any "random flowchart" problem in any arena. That said, if you don't care about the math, scroll to the very bottom of the post for the TL;DR answer.

Markov-ifying Xanadu's clock puzzle

If you've dealt much with probability and/or random processes, you might have recognized (probably faster than I did) that the Xanadu clock puzzle is a textbook example of a discrete Markov chain. For anyone who's not familiar with the term, a discrete Markov chain is a mathematical model for describing a system that:

- Has a set number of possible states

- Transitions from the current state to the next state at regular intervals

- Only cares about what the current state is when deciding what the next state will be - the history is irrelevant, the only thing that matters is the current state

That's about it, for our purposes*. And indeed, our flowchart above is literally just a discrete Markov chain. There are a bunch of nodes, or states... each state (with the exception of the end states) transitions to another state with a set probability... and the state you go to next is entirely dependent on whatever state you're currently in. Let's make this a bit clearer - I'm going to rewrite the flowchart, but this time numbering the states and replacing the die ranges with probabilities (expressed as decimals rather than percentages).

[Figure: Xanadu's clock puzzle, Markov-ified]

Quick aside... before we go further, I'd like to answer a question I'm sure at least half of you are asking: "why bother with this? Even if you really want to know the answer you can just write a simulation to run 10,000 trials and figure it out." The answer is that math is cool and I think this is a lot more fun than brute-forcing it. As you'll see below, we can calculate an exact answer to the question posed. We don't need to run 10,000 simulations to be sure we have the right answer, we can just get the exact answer. How cool is that? This method is also much less time-consuming... at least if you're not also writing up a detailed explanation of how to do it.
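For comparison, here's roughly what the brute-force route looks like. This is a minimal sketch only: the flowchart in it is a made-up two-node toy (`TOY_CHART`, with nodes "A" and "B" and end nodes "Fail" and "Win"), not the Xanadu chart, and every name in it is my own invention for illustration.

```python
import random

# Hypothetical toy flowchart (NOT the Xanadu numbers). Each node maps a
# d10 face (0 stands for the "10" face) to the node that roll leads to.
TOY_CHART = {
    "A": {face: "B" if face in (1, 2, 3, 4, 5) else "Fail" for face in range(10)},
    "B": {face: "A" if face in (1, 2, 3, 4, 5) else "Win" for face in range(10)},
}
END_NODES = {"Fail", "Win"}


def run_attempt(rng, start="A"):
    """Roll through the chart once; return (end node, number of rolls)."""
    node, rolls = start, 0
    while node not in END_NODES:
        node = TOY_CHART[node][rng.randrange(10)]
        rolls += 1
    return node, rolls


def estimate(trials=20_000, seed=0):
    """Estimate end-node frequencies and the mean number of rolls per attempt."""
    rng = random.Random(seed)
    counts = {end: 0 for end in END_NODES}
    total_rolls = 0
    for _ in range(trials):
        end, rolls = run_attempt(rng)
        counts[end] += 1
        total_rolls += rolls
    freqs = {end: n / trials for end, n in counts.items()}
    return freqs, total_rolls / trials


freqs, mean_rolls = estimate()
print(freqs, mean_rolls)
```

For this toy chart the exact values are 2/3 for "Fail", 1/3 for "Win", and 2 rolls per attempt. The simulation only gets close to those, and how close depends on the number of trials.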

Alright, back to the math. The observant reader will notice 3 major differences between the original flowchart and my Markov-ification of it above.

- I split the "Start" node into two states. This is because when first starting the puzzle, you can transition from that "Start" node to states 2, 3, or 4 - but if you return to the "Start" node after that you don't just continue the flowchart, you have failed to solve the puzzle this attempt and the Referee gets to roll for wandering monsters before you can try again. Functionally, the "Start" node actually hides two different states - the actual start state and the "try again" end state.

- I drew additional loopy arrows from each of the three end states (also known in the lingo as "absorbing" states) back to themselves, with probabilities of 1 (aka 100%). This is basically just a visual representation of the fact that they are terminal states that effectively end the chain. To be a valid Markov chain, every state has to have all the arrows leaving it add up to 1, so adding these loopy arrows satisfies that requirement.

- The original flowchart has the "8" d10 result listed twice going out of state 6, once going up to state 3 and once going down to state 9 (aka "Uh Oh!"). I asked the author on DTRPG and he confirmed that this is a typo. I chose to resolve this typo in the "nicer" way, changing the die range for the transition from state 6 to state 9 from 8-0 to 9-0, aka a probability of 20% instead of 30% - and fortunately, that's what the author did as well, so I don't have to redo this whole post to match v3.1 of the adventure. Success.

The next step in Markov-ifying our state machine is to represent it in matrix form. Matrices are the building blocks of linear algebra, and they're amazing for solving a whole range of mathematical problems, especially those that involve multiplying specific sets of numbers in very specific ways. That's a woefully inadequate summary, but I'll leave the detailed further reading to anyone who's interested and for now will move on with the problem.

Turning this flowchart into a matrix is pretty easy. Each current state gets a row, each future state gets a column, making a square matrix. The value of any given element is the probability that the state in the given row will transition to the state of the given column. This is called the "transition matrix", and it is a complete mathematical representation of the Markov chain (aka it contains all the info contained in the flowchart).
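If you'd rather not type the matrix in by hand, the construction can be sketched in a few lines of code. The chain below is a hypothetical four-state toy (two regular nodes, two end nodes), not the Xanadu chart, and `build_transition_matrix` and `ARROWS` are names I made up for this example.

```python
from fractions import Fraction

# Hypothetical toy flowchart (NOT the Xanadu numbers).
# States: 1 = A, 2 = B, 3 = Fail (end state), 4 = Win (end state).
# Each arrow is (from_state, to_state, number of d10 faces on that arrow).
ARROWS = [
    (1, 2, 5),  # A: 1-5 -> B
    (1, 3, 5),  # A: 6-0 -> Fail
    (2, 1, 5),  # B: 1-5 -> A
    (2, 4, 5),  # B: 6-0 -> Win
]
END_STATES = [3, 4]


def build_transition_matrix(n_states, arrows, end_states, die_size=10):
    """Row = current state, column = next state, entry = probability."""
    P = [[Fraction(0)] * n_states for _ in range(n_states)]
    for src, dst, faces in arrows:
        P[src - 1][dst - 1] += Fraction(faces, die_size)
    for state in end_states:
        P[state - 1][state - 1] = Fraction(1)  # the "loopy arrow"
    for number, row in enumerate(P, start=1):
        if sum(row) != 1:
            raise ValueError(f"arrows out of state {number} sum to {sum(row)}, not 1")
    return P


P = build_transition_matrix(4, ARROWS, END_STATES)
for row in P:
    print([str(x) for x in row])
```

The row-sum check is the useful part. A die face listed on two different arrows out of the same node makes that row add up to more than 1, so the function complains instead of quietly building a broken matrix.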

[Figure: Clock puzzle Markov transition matrix P]

This probably just looks at first glance like a bunch of random numbers, so take a second to compare the matrix to the flowchart and see how we constructed it. Note that the (1,1) entry is 0, because state 1 cannot transition directly to state 1. The (1,2) entry is 0.3, because there is a 30% chance state 1 will transition to state 2. The (3,4) entry is 0.1, because there is a 10% chance state 3 will transition to state 4... and so on.

OK. For those of you who have held on this long... here's the super cool part and the reason Markov chains are amazing. If we want to know the probability of being in any given state after N cycles (rolls), all we have to do is raise the matrix to the Nth power (aka multiply N copies of it together). That's it. Then just look at whatever row corresponds to your starting position (in our case, the 1st row since we always start in state 1): each column entry is the probability that, after N cycles from that starting position, you are in the state corresponding to that column.

Here's an example. Say we want to know where we're likely to be after rolling the d10 5 times. All we have to do is first raise the matrix to the 5th power... (many programming languages and graphing calculators will do matrix math, you can also use one of the myriad of free calculators available online)

EDIT: Microsoft Excel/Google Sheets will also do matrix multiplication and inversion, though you need to make a custom function for exponentiation.

[Figure: Clock puzzle probabilities after 5 cycles]

Now we look at the first row (since we started in state 1), and we see that after 5 die rolls we have a 46.9% probability of having looped back to the start (column 8), a 17.9% probability of having hit "Uh Oh!" (column 9), a 9.3% probability of having solved the puzzle (column 10), and that the rest of the remaining probability is spread out among the other nodes (aka we are somewhere in the blue nodes and are still rolling after making 5 d10 rolls).
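For anyone who wants to try the "raise it to the Nth power" step without a calculator, here is a small sketch in plain Python. The matrix is a hypothetical four-state toy chain (states A, B, Fail, Win), not the Xanadu matrix, and `mat_mul` and `mat_pow` are helper names I made up.

```python
from fractions import Fraction

half = Fraction(1, 2)

# Hypothetical toy chain (NOT the Xanadu matrix).
# Rows/columns in order: A, B, Fail (absorbing), Win (absorbing).
P = [
    [0, half, half, 0],
    [half, 0, 0, half],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]


def mat_mul(A, B):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def mat_pow(M, n):
    """Multiply n copies of M together, starting from the identity matrix."""
    size = len(M)
    result = [[Fraction(int(i == j)) for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, M)
    return result


P5 = mat_pow(P, 5)
print([str(x) for x in P5[0]])  # first row: where we are after 5 rolls from A
```

Using `Fraction` keeps every entry exact, so the first row always adds up to exactly 1 no matter how many rolls you ask for.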

Solving the problem (good enough)

Alright! We can now solve our initial problem, which was to answer the question "what is the probability that any given solution attempt will result in any of the three given end states?" Since we know that after enough cycles we must eventually end up in one of the three absorbing states (note that this isn't true for all Markov chains, but it is true for this particular Markov chain), we could just raise the matrix to some arbitrarily high power (aka until the probabilities of the non-absorbing states go to 0) and check the probability of each absorbing state. Aaaand.... yeah, that would work. It'd work just fine. Let's go ahead and do that.

[Figure: Clock puzzle probabilities after 100 cycles]

And there we have it. Looking at the 1st row of this matrix (because we always start in state 1), we see that on any given attempt there is a 60.8% probability of looping back to the start, a 25.8% probability of having to roll on the "Uh Oh!" table, and a 13.4% probability of solving the puzzle. In fact, if you are running this adventure and don't think your table would enjoy rolling a d10 over and over again until you spit out a random answer, you can replace the flowchart with a table containing the above probabilities (or an approximation using a d8, see the final section of the post) and get pretty much the exact same results with much less rolling.
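If picking "some arbitrarily high power" feels too hand-wavy, one option is to keep rolling the probabilities forward until almost nothing is left in the non-absorbing states. This is just a sketch under assumptions: the matrix is a hypothetical toy chain rather than the Xanadu one, and the tolerance and names (`run_until_absorbed`, `TRANSIENT`) are my own choices.

```python
# Hypothetical toy chain (NOT the Xanadu matrix).
# Rows/columns in order: A, B, Fail (absorbing), Win (absorbing).
P = [
    [0.0, 0.5, 0.5, 0.0],
    [0.5, 0.0, 0.0, 0.5],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
TRANSIENT = [0, 1]  # indices of the non-absorbing states


def step(dist, P):
    """Advance a row of state probabilities by one die roll."""
    size = len(P)
    return [sum(dist[i] * P[i][j] for i in range(size)) for j in range(size)]


def run_until_absorbed(P, start=0, tol=1e-12, max_rolls=10_000):
    """Roll forward until the probability of still rolling drops below tol."""
    dist = [0.0] * len(P)
    dist[start] = 1.0
    for rolls in range(1, max_rolls + 1):
        dist = step(dist, P)
        if sum(dist[i] for i in TRANSIENT) < tol:
            return dist, rolls
    raise RuntimeError("did not converge; can every state reach an end state?")


dist, rolls = run_until_absorbed(P)
print(dist, rolls)
```

This only tracks the one row we care about (the starting state) instead of the whole matrix, and it stops on its own once the leftover probability is negligible.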

Hold on though... I promised you an exact solution, with no need to do a large enough number of trials or whatnot. Haven't I just come up with a method that still requires some trial and error to see when you've gotten a "high enough" power? Well... yes. That's true. Funny story though... it turns out that for an absorbing Markov chain like this there is actually a way to go straight to the exact solution, no multiple tries or high power iteration needed. It's slightly more involved, but still pretty easy. Let's dive in.

Solving the problem (exact solution)

In the interest of brevity (ha), in this section I'm going to more or less go straight through the solution steps without much explanation. For those interested in the details, the Wikipedia article on absorbing Markov chains is a pretty decent high-level overview that basically just has the formulas, and this probability book chapter from Dartmouth gives an awesome undergrad-level detailed treatment of the entire subject of Markov chains (absorbing chain stuff starts on page 12 of the PDF).

Alright. Here we go... the first step in finding the exact solution is to make sure the Markov chain is constructed such that all of the absorbing states have the highest numbers, thus arranging our transition matrix so there are 0's in the bottom left quadrant, diagonal 1's in the bottom right quadrant, and some other stuff in the top two quadrants. Note that the quadrants aren't necessarily of equal size; it just depends on how many absorbing states there are. Fortunately, I knew this was coming so I numbered the states that way from the start, no re-arranging needed. Success! I'll repeat the transition matrix below, this time drawing little dotted lines to show the quadrants and with an additional term showing the names of the quadrants.

[Figure: Clock puzzle Markov transition matrix P with quadrants shown]
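In symbols, the quadrant layout looks like the block matrix below. The sizes assume the numbering used in this post, with states 1 through 7 as the non-absorbing states and states 8 through 10 as the absorbing ones.

```latex
P =
\begin{pmatrix}
Q & R \\
\mathbf{0} & I
\end{pmatrix},
\qquad
Q \in \mathbb{R}^{7 \times 7},\;
R \in \mathbb{R}^{7 \times 3},\;
\mathbf{0} \in \mathbb{R}^{3 \times 7},\;
I \in \mathbb{R}^{3 \times 3}
```

Q holds the probabilities of moving between non-absorbing states, R holds the probabilities of jumping from a non-absorbing state into an absorbing one, and the bottom two blocks just say "once you're absorbed, you stay put".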

The next step is to calculate something called the "fundamental matrix" for this absorbing Markov chain. Take the top left quadrant, also known as Q, and subtract it from a diagonal 1 matrix of the same size (also called an identity matrix because anything you multiply by it ends up the same - like multiplying by 1).

[Figure: Step 1 of calculating fundamental matrix N]

Now we take the inverse of the matrix above. The inverse A^-1 of any given matrix A is the matrix that yields an identity matrix (diagonal 1's) when you multiply the two together. Actually finding this inverse by hand can be quite tricky, so back to the calculators and... bam. We now have the fundamental matrix, also known as N, for this Markov chain.

[Figure: Fundamental matrix N for this Markov chain]
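If you're curious what the calculator is doing for the subtract-then-invert step, here is a sketch with exact fractions. The Q below belongs to a hypothetical two-node toy chain, not the Xanadu chart, and the helper names (`identity`, `subtract`, `invert`) are mine. The inversion is ordinary Gauss-Jordan elimination, which is one common way to do it and not necessarily what any particular calculator uses.

```python
from fractions import Fraction

# Q for a hypothetical two-node toy chain (NOT the Xanadu numbers):
# node A moves to node B half the time, and B moves back to A half the time.
Q = [
    [Fraction(0), Fraction(1, 2)],
    [Fraction(1, 2), Fraction(0)],
]


def identity(n):
    """n-by-n matrix with 1's on the diagonal and 0's elsewhere."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def subtract(A, B):
    """Entry-by-entry A - B."""
    return [[a - b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


def invert(M):
    """Gauss-Jordan elimination on [M | I]; raises StopIteration if M is singular."""
    n = len(M)
    aug = [list(row) + id_row for row, id_row in zip(M, identity(n))]
    for col in range(n):
        # Find a row with a nonzero entry in this column and move it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [x / pivot_value for x in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


N = invert(subtract(identity(len(Q)), Q))
print([[str(x) for x in row] for row in N])
```

For this toy Q the result is N = [[4/3, 2/3], [2/3, 4/3]], which you can check by multiplying it back against (I - Q).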

For a host of interesting reasons that you can read about in the Dartmouth paper, we can do some incredibly cool stuff with this matrix. For starters... what does this matrix even represent on its own? Well, each row still represents the starting state, and as it turns out, any given column entry is the expected (average) number of times you will visit the state given by that column number before being absorbed. So entry (1,4) tells us that if you start in state 1 (aka the "Start" node), you will on average visit node 4 0.515 times (aka roughly once every other try). Looking at the top row as a whole, we can see that most of the states are visited roughly once every other try, but that the start node and node 3 (aka the one in the middle) are visited roughly once every try. That makes sense given that node 3 is smack dab in the middle and that, well, we always start on node 1.

That's sort of interesting, maybe... but it's not what we wanted. We wanted the exact solution for the probability of ending up in any given absorbing state, and the absorbing states aren't even in this matrix! Right you are. Let's get that answer right quick. Easy. All we have to do is multiply the fundamental matrix N by the top right quadrant of the original matrix, also known as R, which yields an absorption probability matrix B.

[Figure: Absorption probability matrix B]

Same as always, the row indicates the starting state, so we'll look at row 1 here. The columns are the absorbing states only, which are in the same order as before. Hmm, well whaddaya know? This confirms that indeed, any given attempt has a 60.8% probability of looping back to the start, a 25.8% probability of having to roll on the "Uh Oh!" table, and a 13.4% probability of solving the puzzle - a nice double check for our earlier "good enough" answer. Math is great, yeah?
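The B = NR step is a single matrix multiplication. Here is a sketch on a hypothetical two-node toy chain (not the Xanadu numbers). The N in the code is that toy chain's fundamental matrix, and the code double-checks it against Q before using it. All names are made up for illustration.

```python
from fractions import Fraction as F

# Hypothetical toy chain (NOT the Xanadu numbers).
# Non-absorbing states: A, B. Absorbing states: Fail, Win.
Q = [[F(0), F(1, 2)], [F(1, 2), F(0)]]        # A/B -> A/B
R = [[F(1, 2), F(0)], [F(0), F(1, 2)]]        # A/B -> Fail/Win
N = [[F(4, 3), F(2, 3)], [F(2, 3), F(4, 3)]]  # fundamental matrix of this toy chain


def mat_mul(A, B):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


# Sanity check: N really is the inverse of (I - Q).
I_minus_Q = [[F(int(i == j)) - Q[i][j] for j in range(2)] for i in range(2)]
assert mat_mul(N, I_minus_Q) == [[1, 0], [0, 1]]

# Absorption probabilities: row = starting state, column = absorbing state.
B = mat_mul(N, R)
print([[str(x) for x in row] for row in B])
```

Each row of B has to add up to 1, since every attempt ends somewhere. That makes a quick check for mis-typed entries.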

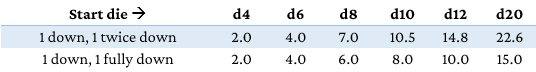

Last thing before we go... how long, exactly, does it take to solve the clock puzzle? Obviously there will be a lot of variance, but can we get an idea of the average number of rolls? Of course we can. The expected (average) number of steps (die rolls) needed to reach an absorbing state, given a certain starting state, is given by the corresponding entry of the vector t that results from multiplying the fundamental matrix N by a vertical column of 1's, as seen below. Note that multiplying by a vertical column of 1's is the same thing as just adding together all the entries of each row, which makes sense because each entry is the expected (average) number of times you'll visit any given state before being absorbed - so of course the average number of total steps would just be the sum of those individual visits.

[Figure: Expected # of steps vector t]
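Written out, the "multiply by a column of 1's" step is just a row sum. The small N in the second line is a hypothetical two-state example for illustration, not the Xanadu matrix.

```latex
t = N \mathbf{1},
\qquad
t_i = \sum_{j} N_{ij}

% Hypothetical two-state example:
N = \begin{pmatrix} 4/3 & 2/3 \\ 2/3 & 4/3 \end{pmatrix}
\;\Longrightarrow\;
t = \begin{pmatrix} 4/3 + 2/3 \\ 2/3 + 4/3 \end{pmatrix}
  = \begin{pmatrix} 2 \\ 2 \end{pmatrix}
```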

And there we have it. On average, any given attempt takes 4.51 die rolls to reach an end state (though there is definitely very high variance here - a successful escape will almost certainly take more rolls than the typical attempt which ends in a return to the start). Since the probability of solving the puzzle on any given try is 13.4%, that means our expected number of tries is 1/.134 = 7.46 and that any given group will roll their d10, on average, 7.46*4.51 = 33.6 times trying to escape this dungeon.

Markov chains, huh... what are they good for?

Alrighty. So what was the point of all this, beyond figuring out how to simplify a somewhat lame "puzzle" that's a fairly small part of an otherwise pretty awesome adventure as a flimsy excuse to give a math lesson on an RPG blog? There are a few reasons why I think this was a worthwhile endeavor:

Markov chains are everywhere

Markov chains, if you know where to look, show up fairly often in RPG products. Off the top of my head there's hex flowers, the innovation track progressions from Magical Industrial Revolution, and of course any sort of "random flowchart" system for randomly navigating between points in a chaotic landscape such as in Silent Titans or Xanadu. If it involves randomly determined travel between a predetermined set of nodes or states, it's a Markov chain.

With that in mind, I think having a bit of knowledge about how Markov chains work and how to do some basic analysis of them can aid any RPG designer who's implementing such a structure in their product. Mathematical literacy is an important tool in the RPG designer's toolbox. Granted, Markov chain analysis isn't quite as important as knowing (for example) that 1d20 gives you a flat distribution and 3d6 gives you a bell curve... but it's in that same ballpark, in the sense that you absolutely can do good RPG design without knowing it, but knowing it will almost certainly make anything you design better.

I don't know if Vasili Kaliman is 100% aware of the overall probabilities his flowchart results in, or if he just tuned it more by trial and error. Either way, I'd like to equip any future flowchart-makers with the tools to quickly and easily tune these sorts of mechanics without needing to do a ton of trial and error that will almost certainly not give you a great sense of how the chain will actually play (due to the small sample size). Instead, just change a few entries in your P matrix, recalculate, and you have your new probabilities - you can spend your playtesting time on something more important instead.

I hope I've shown, too, that despite not being a topic commonly taught (I'm an engineer and I didn't even see them til an elective course in grad school), Markov chains aren't actually that difficult to analyze. While there are a lot of matrices and numbers above, everything I did ultimately just boils down to making a flowchart, moving numbers from that flowchart into a matrix, and plugging that matrix into a calculator. I didn't do any arithmetic in the "background" of this post other than the (I - Q) bit (which was subtraction); everything else was just plug 'n play.

It's fun to solve problems

As previously mentioned, I find solving this sort of problem fun. When I saw Ben comment in his review that there should just be a single die roll to determine which end state they end up at, I not only agreed with him, but was fairly confident I remembered a way to rigorously determine the exact die roll that would be equivalent to the flowchart... so... I did. It's always good to stretch the brain now and again, refresh one's memory on techniques and concepts long forgotten. Even better if that knowledge can be shared and potentially used by other people!

Extra credit

- Try solving the Markov chain with a different resolution of the typo in node 6 - perhaps by removing the arrow back to node 3 entirely. How does this change the overall probabilities? Does it make the flowchart "meaner", and if so, by how much?

- Try solving the Markov chain assuming that you don't exit the chain when returning to Start, but instead keep going until you hit Uh Oh! or Solved. Can you infer what the new absorption probabilities should be before doing any additional matrix math? How does this affect the expected number of tries? What about the expected number of die rolls? The answer may surprise you.

TL;DR / Summary

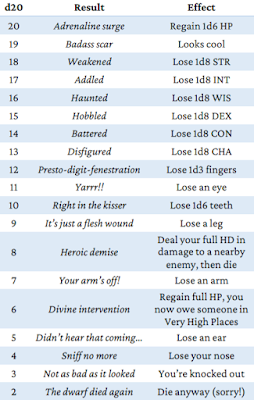

The probabilities

In summary... the Xanadu clock "puzzle" flowchart takes, on average, about 4.5 rolls to reach an end state, will require about 7.5 tries to solve, and is equivalent (plus or minus half a percentage point) to a single d100 roll with the results and ranges shown below. If you want to cut all of the "make multiple die rolls before something happens" out, axe the flowchart and use the single roll instead for each attempt.

[Figure: Equivalent and approximate probabilities for Xanadu clock puzzle]

If/when I run this adventure, I'll probably use the d8 table instead. It's surprisingly close to the base probabilities, and having a d100 table with the uneven breakpoints shown above slightly unsettles me.
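For anyone who wants to build this kind of single-roll table for their own flowchart, here is one way to turn absorption probabilities into die ranges. It uses the three probabilities from this post (60.8%, 25.8%, 13.4%) and a largest-remainder rounding rule, which is my assumption; the breakpoints it produces are one reasonable rounding and may not match the table pictured above exactly. The function names are made up.

```python
def die_faces(per_mille, sides):
    """Split `sides` die faces among outcomes, given probabilities in tenths of a percent.

    Uses largest-remainder rounding: everyone gets the rounded-down share,
    then leftover faces go to the outcomes with the biggest remainders.
    """
    if sum(per_mille) != 1000:
        raise ValueError("probabilities must add up to 1000 (i.e. 100.0%)")
    faces = [p * sides // 1000 for p in per_mille]
    remainders = [p * sides % 1000 for p in per_mille]
    leftover = sides - sum(faces)
    by_remainder = sorted(range(len(per_mille)), key=lambda i: remainders[i], reverse=True)
    for i in by_remainder[:leftover]:
        faces[i] += 1
    return faces


def die_ranges(faces):
    """Turn face counts into inclusive (low, high) roll ranges."""
    ranges, low = [], 1
    for count in faces:
        ranges.append((low, low + count - 1))
        low += count
    return ranges


# Probabilities from this post: back to Start, "Uh Oh!", Solved.
CLOCK_PUZZLE = [608, 258, 134]

d100 = die_ranges(die_faces(CLOCK_PUZZLE, 100))
d8 = die_ranges(die_faces(CLOCK_PUZZLE, 8))
print(d100, d8)
```

With this rounding the d8 comes out as 1-5 back to Start, 6-7 "Uh Oh!", and 8 Solved, which works out to 62.5%, 25%, and 12.5%.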

Improving the Xanadu clock puzzle

Also, one final note - while I've been fairly critical of the Xanadu clock puzzle in this post, that's mainly because as-designed, it really does boil down to a random determination of end state with the intermediate states having zero effect on gameplay (other than taking up more table time with additional rolls). My complaint isn't that it's a Markov chain - rather, it's that it's a Markov chain that doesn't leverage any of the properties of being a Markov chain and that really should just be a random table.

If, instead, each of the blue nodes had some sort of specific property or effect every time it was visited, the flowchart could be otherwise identical mechanically but add much more flavor. Every die roll would mean something, rather than the players making 5+ die rolls in a row waiting for something to happen. If/when I run this adventure, I might write up an entry for each of the 6 blue nodes - or maybe even 6 separate random tables, one for each node! Project for another day, perhaps.

Thanks for reading! Let me know what you think below. Also don't hesitate to point out if you think I've made an error - I manually entered all of those numbers into the matrices after doing the math, so I absolutely could've mis-entered something (and/or made a fundamental error in the analysis, fingers crossed that isn't the case).

*For any math teachers/profs in the audience, I apologize for my fairly streamlined way of presenting all of this. I'm sure there's all sorts of caveats I'm leaving out and some ways in which my language is less rigorous than it could be. Feel free to clarify if I got something wrong. :P